publications

2025


InterAct: Advancing Large-Scale Versatile 3D Human-Object Interaction Generation

In CVPR, 2025

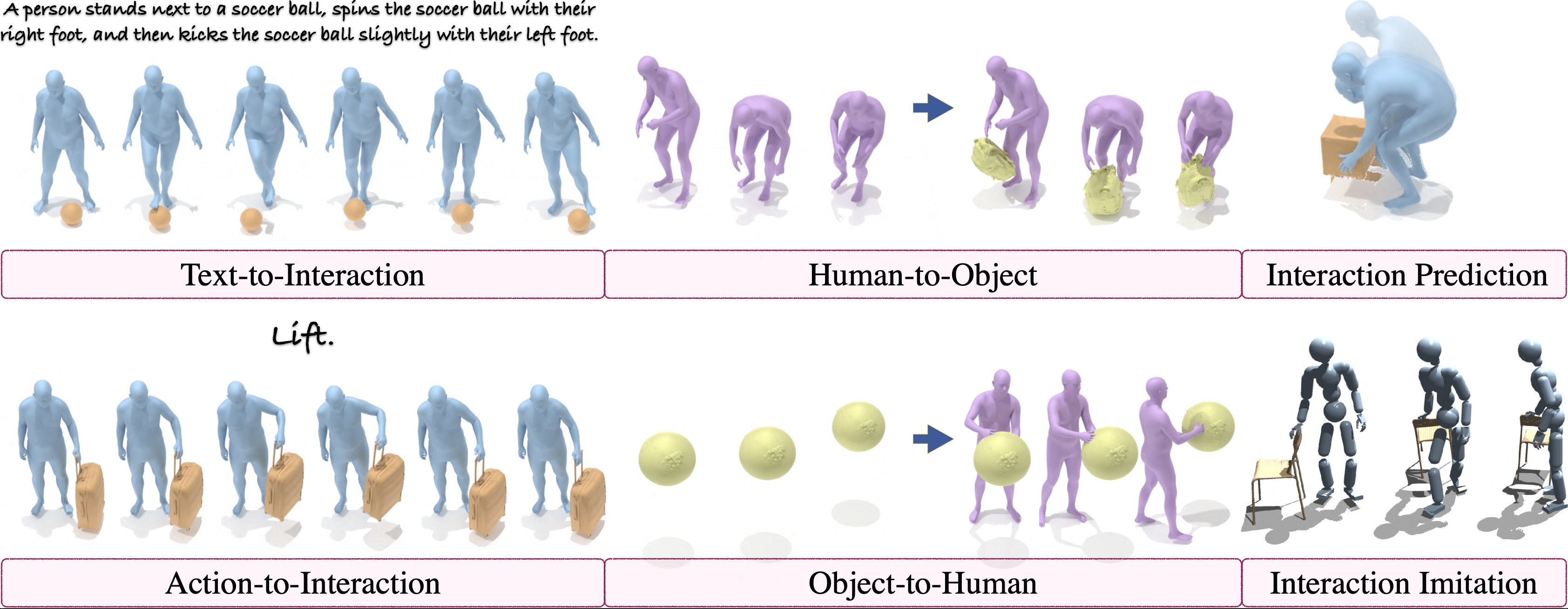

While large-scale human motion capture datasets have advanced human motion generation, modeling and generating dynamic 3D human-object interactions (HOIs) remains challenging due to dataset limitations. These datasets often lack extensive, high-quality text-interaction pair data and exhibit artifacts such as contact penetration, floating, and incorrect hand motions. To address these issues, we introduce InterAct, a large-scale 3D HOI benchmark with key contributions in both dataset and methodology. First, we consolidate 21.81 hours of HOI data from diverse sources, standardizing and enriching them with detailed textual annotations. Second, we propose a unified optimization framework that enhances data quality by minimizing artifacts and restoring hand motions. Leveraging the insight of contact invariance, we preserve human-object relationships while introducing motion variations, thereby expanding the dataset to 30.70 hours. Third, we introduce six tasks to benchmark existing methods and develop a unified HOI generative model based on multi-task learning that achieves state-of-the-art results. Extensive experiments validate the utility of our dataset as a foundational resource for advancing 3D human-object interaction generation. The dataset will be publicly accessible to support further research in the field.

@inproceedings{xu2025interact,
  title = {InterAct: Advancing Large-Scale Versatile 3D Human-Object Interaction Generation},
  author = {Xu, Sirui and Li, Dongting and Zhang, Yucheng and Xu, Xiyan and Long, Qi and Wang, Ziyin and Lu, Yunzhi and Dong, Shuchang and Jiang, Hezi and Gupta, Akshat and Wang, Yu-Xiong and Gui, Liang-Yan},
  booktitle = {CVPR},
  year = {2025},
}

2024

- Oral

Audio-LLM: Activating the Capabilities of Large Language Models to Comprehend Audio Data

Dongting Li, Chenchong Tang, and Han Liu

In Advances in Neural Networks – ISNN, 2024

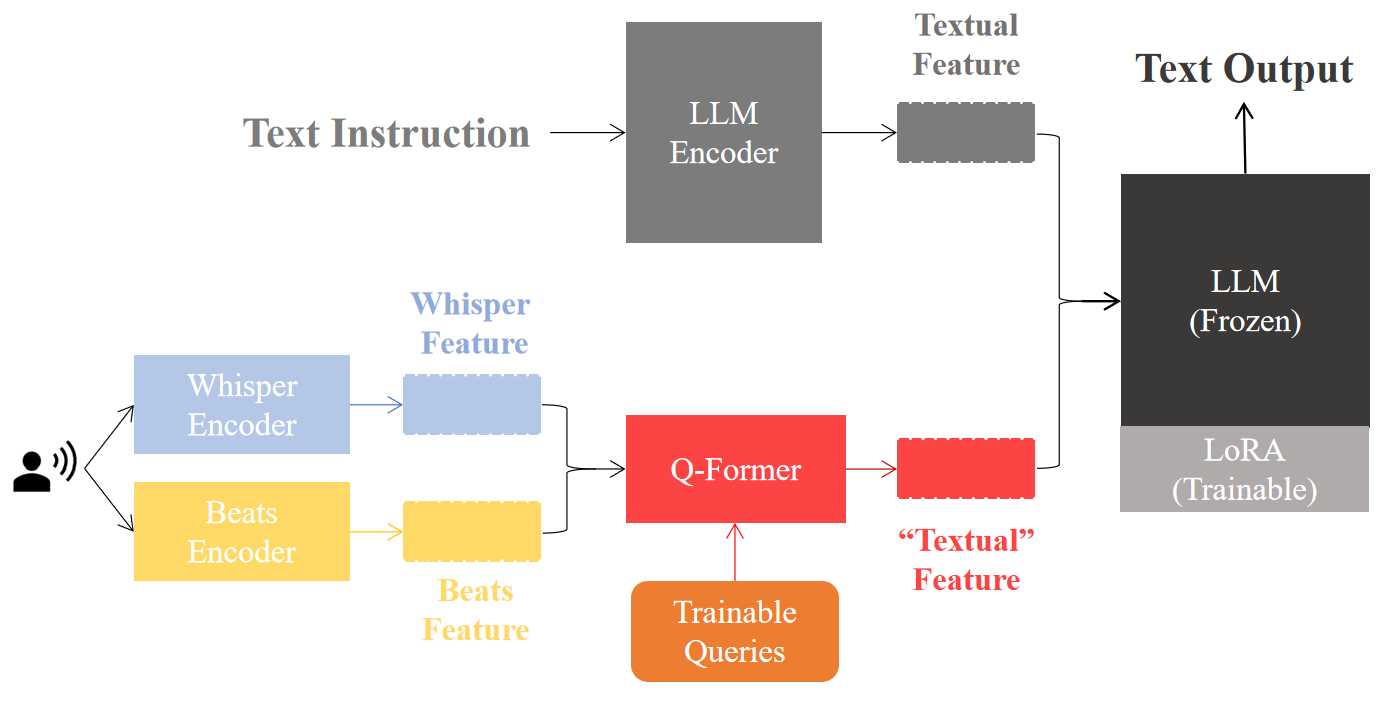

We introduce Audio-LLM, a large language model that improves audio question-answering (AQA) systems and activates the capabilities of large language models to comprehend audio data. Our approach introduces an encoding method that transforms audio data into embedded representations, enabling LLMs to comprehend and process the information contained within the audio. Through a series of fine-tuning stages, we establish alignment between audio and text, allowing LLMs to leverage both auditory and textual prompts. This alignment enables the model to achieve remarkable performance in automatic speech recognition (ASR), emotion recognition (ER), English-to-Chinese translation (En2Zh), music captioning (MC), and other tasks, demonstrating its versatility across various downstream applications. In addition, our model can be trained efficiently: during training, we only need to update approximately 20 million parameters, which represent about 0.27% of the entire Audio-LLM model. Furthermore, the discussion section highlights the model’s adaptability to zero-shot tasks, positioning Audio-LLM as a significant advancement with far-reaching implications for generalized hearing AI.

@inproceedings{li2024audiollm,
  author = {Li, Dongting and Tang, Chenchong and Liu, Han},
  title = {Audio-LLM: Activating the Capabilities of Large Language Models to Comprehend Audio Data},
  booktitle = {Advances in Neural Networks -- ISNN},
  year = {2024},
}